GPT vs Traditional NLP Models

The field of Natural Language Processing (NLP) has seen remarkable advancements in recent years, and the emergence of the Generative Pre-trained Transformer (GPT) has changed the way NLP models operate. GPT is a language model that uses deep learning to generate human-like text. Unlike conventional NLP models, which typically have to be trained separately for each specific task, GPT is pre-trained on vast amounts of data and can then be fine-tuned for various NLP tasks.

GPT made years of NLP research outdated

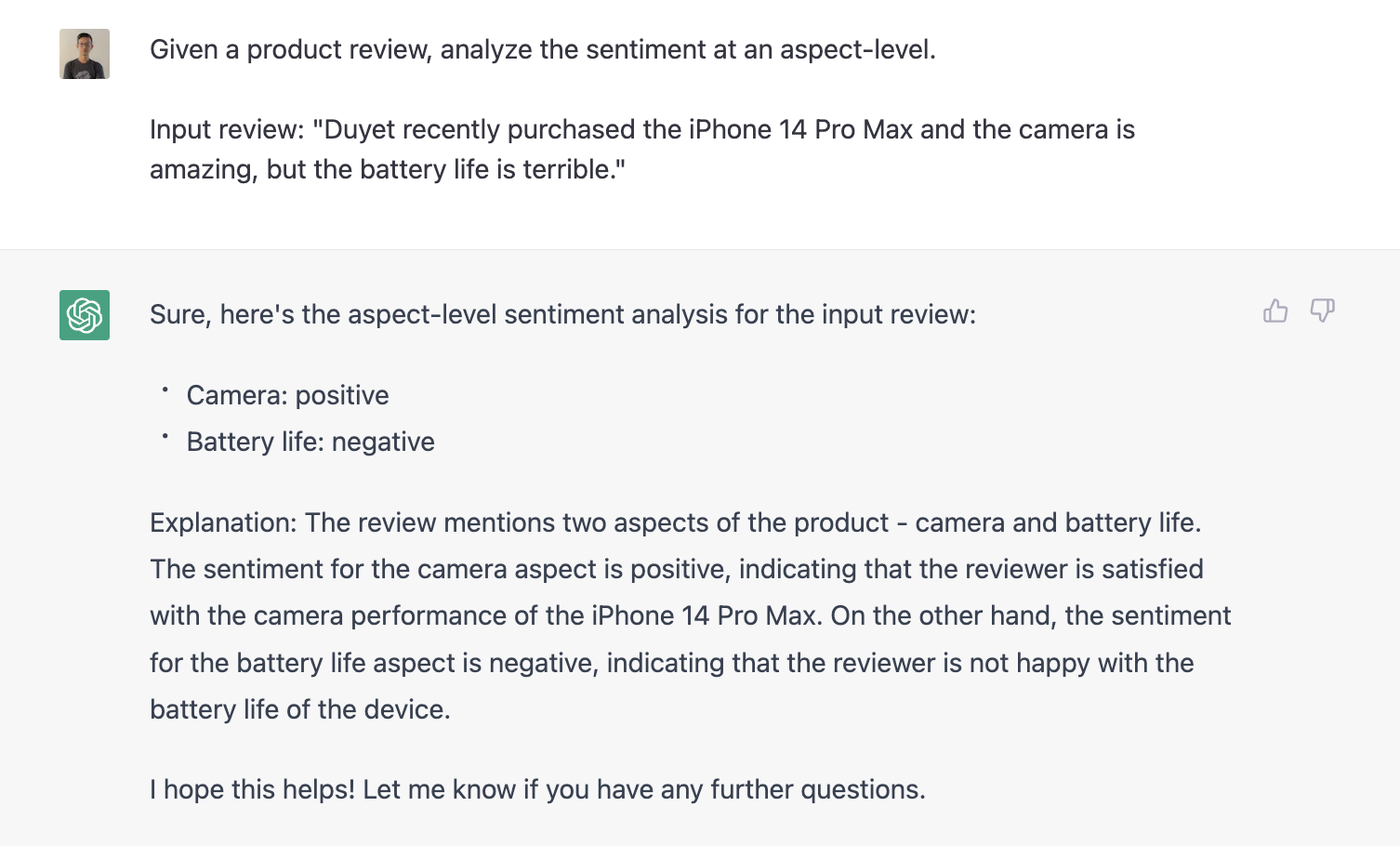

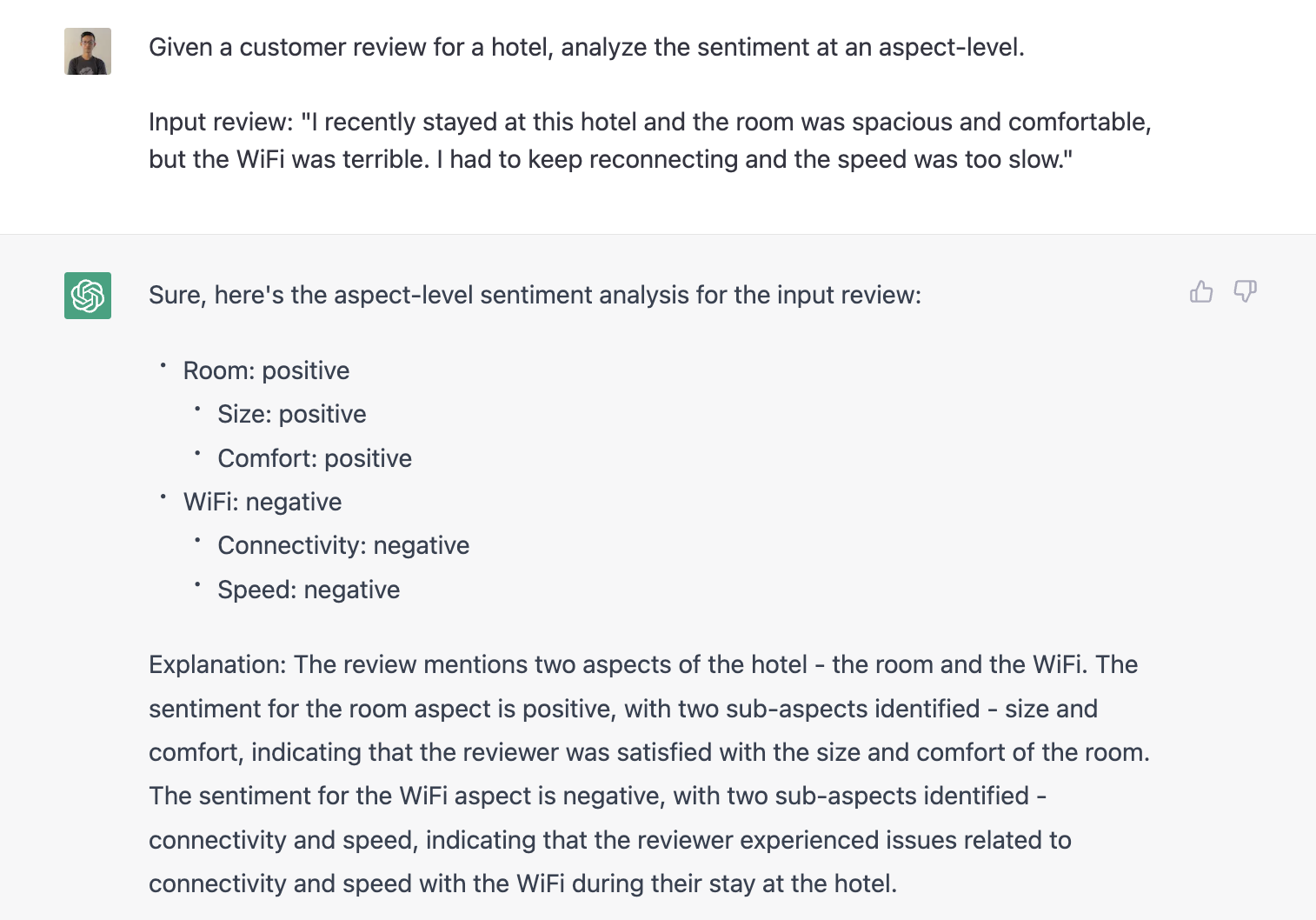

Sentiment Analysis

Sentiment analysis involves identifying the emotional tone behind a text. GPT has made significant strides in this area by performing sentiment analysis at the aspect level.

Aspect-level sentiment analysis goes beyond analyzing the sentiment of the entire text and delves into specific aspects or features of a product or service. For instance, when analyzing hotel reviews, you might want to determine the sentiment of the food, the rooms, or the service. GPT makes this task much more straightforward and accurate by analyzing large amounts of text and identifying the sentiment of specific aspects.
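To make the hotel-review example concrete, here is a minimal sketch of how an aspect-level request could be phrased and how the answer could be read back. The prompt wording, the JSON reply format, and the helper names `build_aspect_prompt` and `parse_aspect_reply` are my assumptions for illustration. No model is called here, and the sample reply is made up.

```python
import json

def build_aspect_prompt(review: str, aspects: list[str]) -> str:
    """Build a prompt asking for one sentiment label per aspect (hypothetical wording)."""
    aspect_list = ", ".join(aspects)
    return (
        "For the review below, give the sentiment (positive, negative, or neutral) "
        f"for each of these aspects: {aspect_list}.\n"
        "Answer with a JSON object mapping each aspect to its sentiment.\n\n"
        f"Review: {review}"
    )

def parse_aspect_reply(reply: str, aspects: list[str]) -> dict[str, str]:
    """Parse a JSON reply, keeping only the requested aspects and known labels."""
    allowed = {"positive", "negative", "neutral"}
    data = json.loads(reply)
    result = {}
    for aspect in aspects:
        label = str(data.get(aspect, "")).lower()
        # Anything missing or outside the three labels is marked as unknown.
        result[aspect] = label if label in allowed else "unknown"
    return result

review = "The rooms were spotless, but the food was cold and bland."
aspects = ["food", "rooms", "service"]
prompt = build_aspect_prompt(review, aspects)

# Made-up reply for illustration; a real model's output may differ.
sample_reply = '{"food": "negative", "rooms": "positive", "service": "neutral"}'
print(parse_aspect_reply(sample_reply, aspects))
```

Asking for a fixed set of labels in a structured format keeps the reply easy to validate, which matters because the model is free to answer in any wording it likes.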

A more complex example:

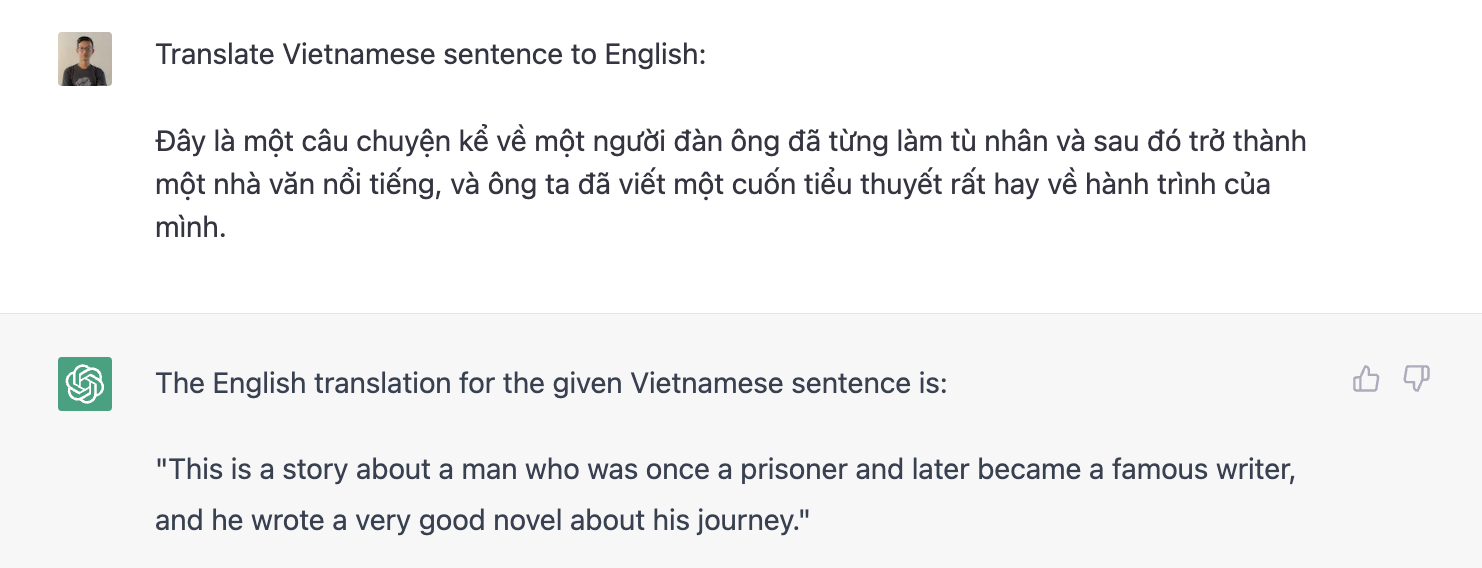

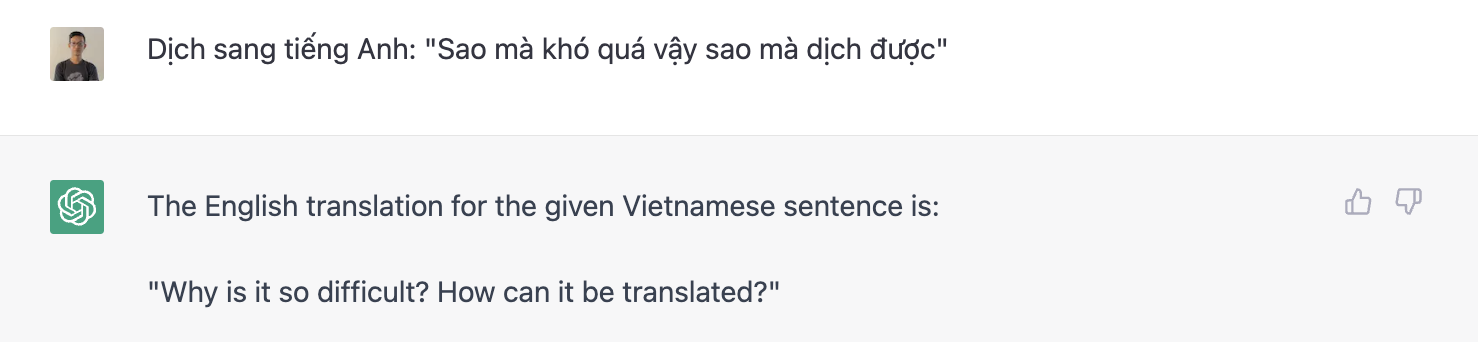

Language Translation

Language translation has always been a challenging task for NLP models. However, GPT has shown remarkable results in this area as well. With its ability to generate human-like text, GPT can perform language translation tasks quickly and accurately. For example, it can translate a text from English to Vietnamese or vice versa with high accuracy.

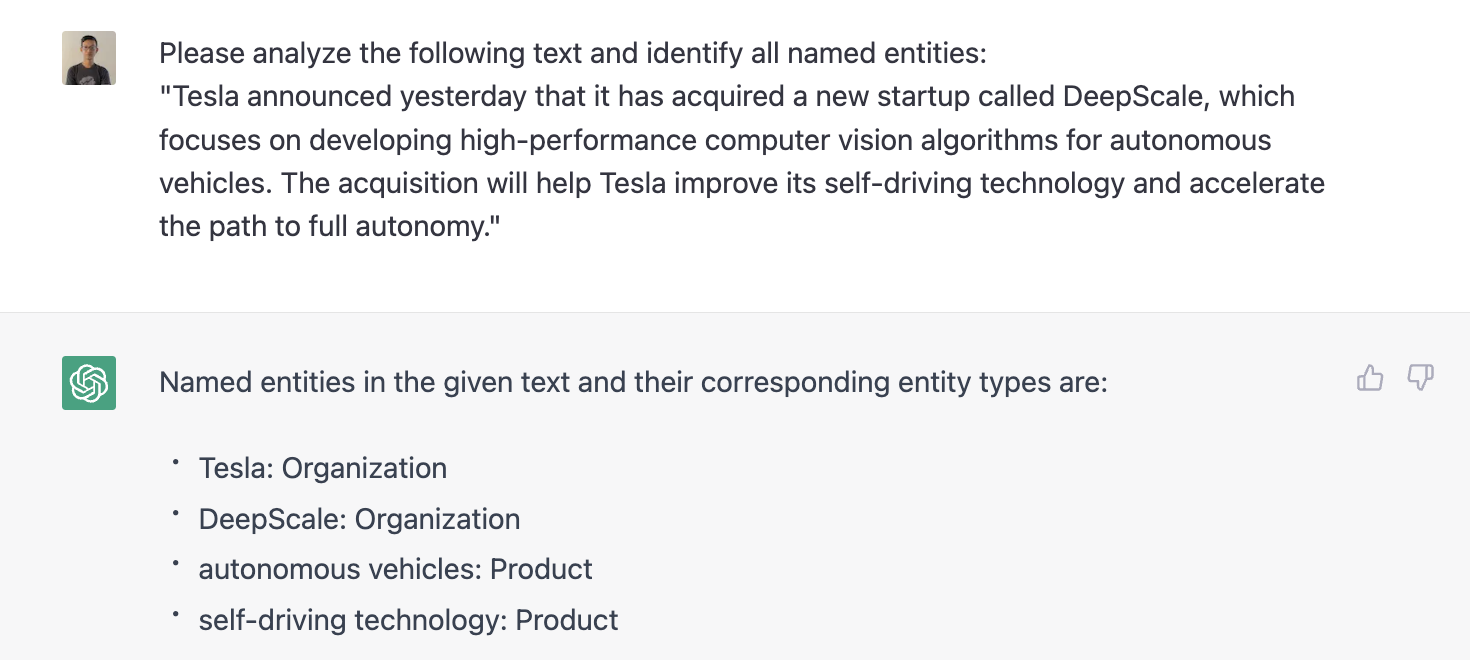

Named Entity Recognition

Named Entity Recognition (NER) involves identifying and extracting named entities from a text. Named entities can be anything from people's names to organization names, and many NLP tasks depend on extracting them accurately. GPT performs well here too: because it takes the context of a text into account, it can extract named entities accurately and quickly.
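One practical detail when using a generative model for NER is checking that each returned entity really appears in the input. The sketch below assumes the model was asked to reply with a JSON list of `{"text", "type"}` objects. That format, the helper name `locate_entities`, and the sample reply are all hypothetical, and no model is called.

```python
import json

def locate_entities(text: str, reply: str) -> list[dict]:
    """Keep entities from a JSON reply that literally occur in the text, with offsets."""
    found = []
    for item in json.loads(reply):
        start = text.find(item["text"])
        if start == -1:
            continue  # the reply named something that is not in the text; skip it
        found.append({
            "text": item["text"],
            "type": item["type"],
            "start": start,
            "end": start + len(item["text"]),
        })
    return found

text = "Ada Lovelace worked with Charles Babbage in London."

# Made-up reply for illustration; a real model's output may differ.
sample_reply = json.dumps([
    {"text": "Ada Lovelace", "type": "PERSON"},
    {"text": "Charles Babbage", "type": "PERSON"},
    {"text": "London", "type": "LOCATION"},
    {"text": "Cambridge", "type": "LOCATION"},  # not in the text, so it is dropped
])

entities = locate_entities(text, sample_reply)
for entity in entities:
    print(entity["type"], entity["text"], entity["start"], entity["end"])
```

Note that `str.find` only returns the first occurrence, so repeated mentions of the same entity would need extra handling.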

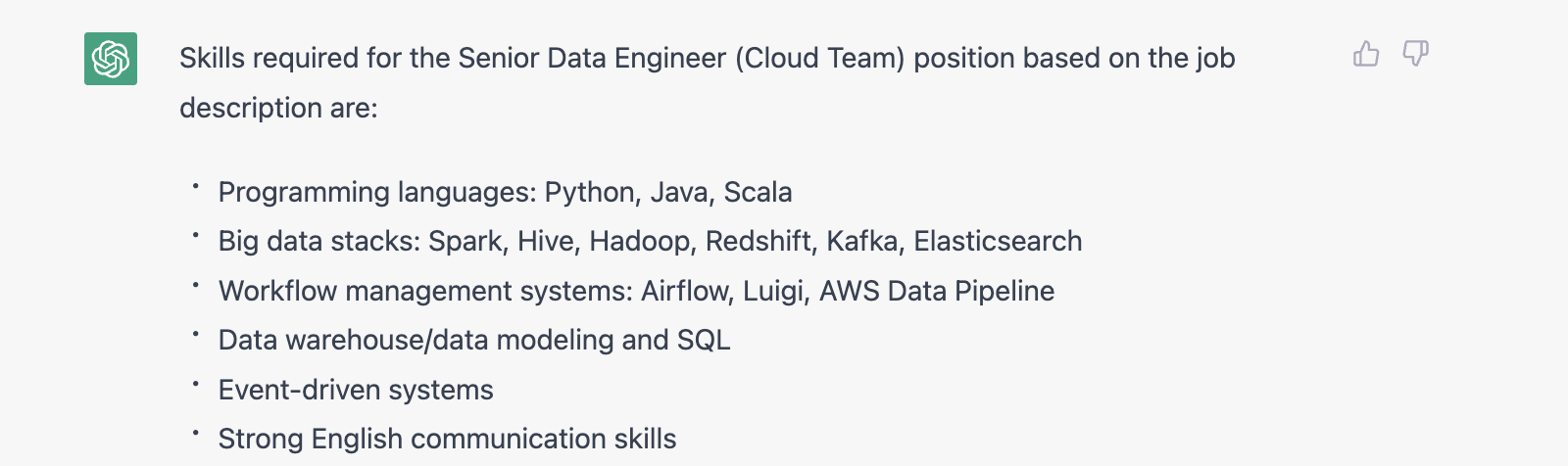

My Old Thesis Work: Skill Extraction and Skill2vec

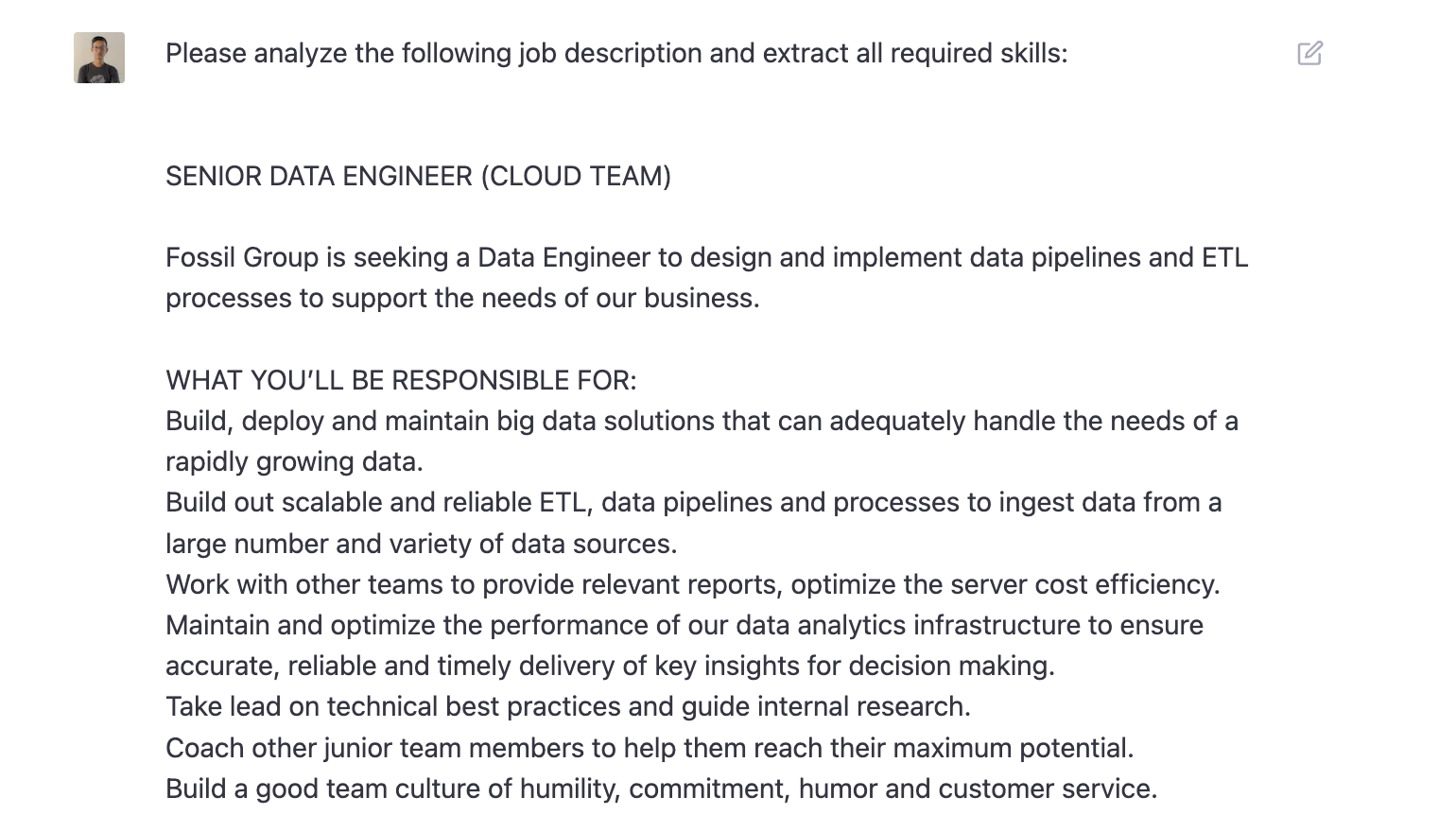

Let's go back to my old thesis work on skill extraction and skill2vec models. Skill extraction involves identifying the skills mentioned in a person's resume or CV, which can then be matched against job openings. I used word2vec models to find similar skills so that HR systems could surface relevant ones. With its language generation capabilities, GPT can perform skill extraction and matching without the need for separate word2vec models. This allows for faster and more accurate matching of skills, making the process much more efficient. Hmm.
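For comparison, the word2vec-style lookup of similar skills comes down to a nearest-neighbor search over skill vectors. The sketch below shows that idea with cosine similarity. The three-dimensional vectors are made up for illustration and do not come from the thesis, and `most_similar` is a hypothetical helper rather than the original implementation.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def most_similar(query: str, vectors: dict[str, list[float]], k: int = 5):
    """Return the k skills closest to the query skill, best match first."""
    q = vectors[query]
    scored = [(skill, cosine(q, v)) for skill, v in vectors.items() if skill != query]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

# Made-up toy vectors; real skill embeddings would be learned from resume data.
toy_vectors = {
    "python": [0.9, 0.1, 0.0],
    "django": [0.8, 0.2, 0.1],
    "flask": [0.85, 0.15, 0.05],
    "java": [0.6, 0.6, 0.0],
    "photoshop": [0.0, 0.1, 0.9],
    "recruiting": [0.1, 0.9, 0.2],
}

top5 = most_similar("python", toy_vectors, k=5)
for skill, score in top5:
    print(f"{skill}: {score:.3f}")
```

With these toy vectors, the web frameworks rank closest to "python" and "photoshop" ranks last, which is the kind of behavior a top-5 relevant-skills query relies on.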

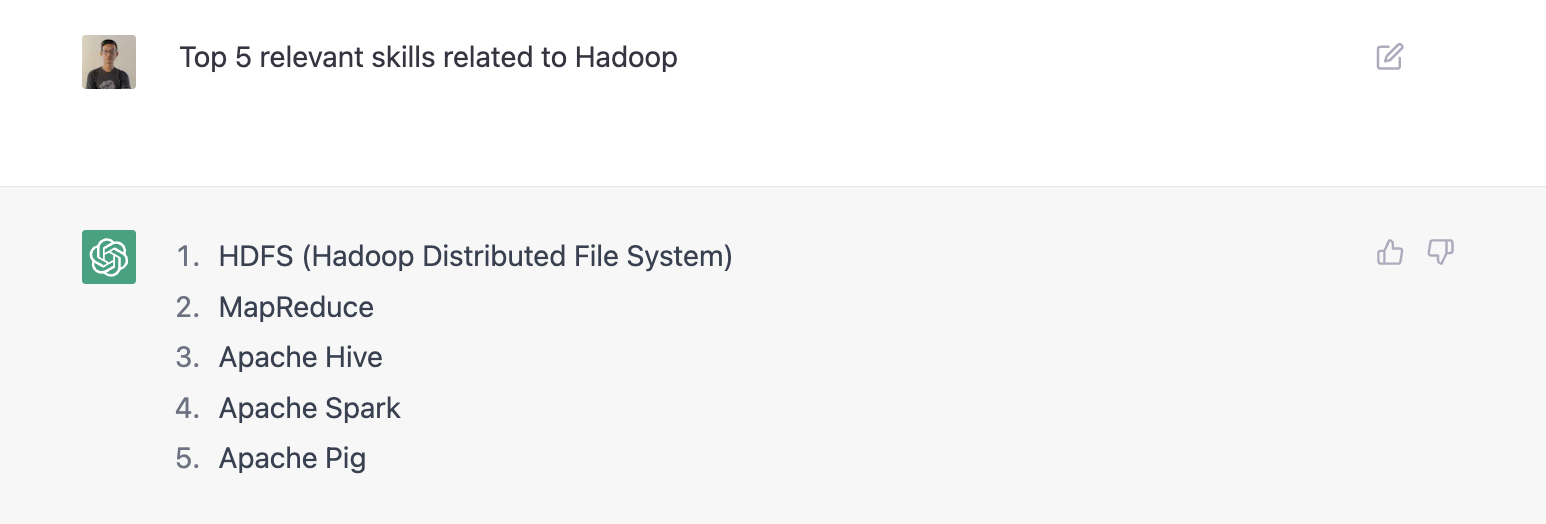

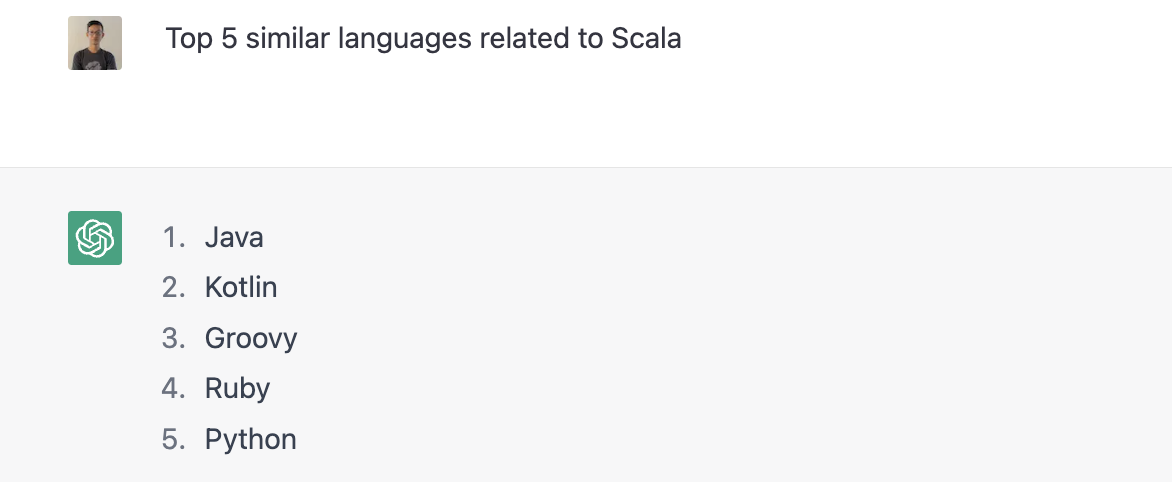

And the response:

Let's look at my old paper's query for the top 5 relevant skills:

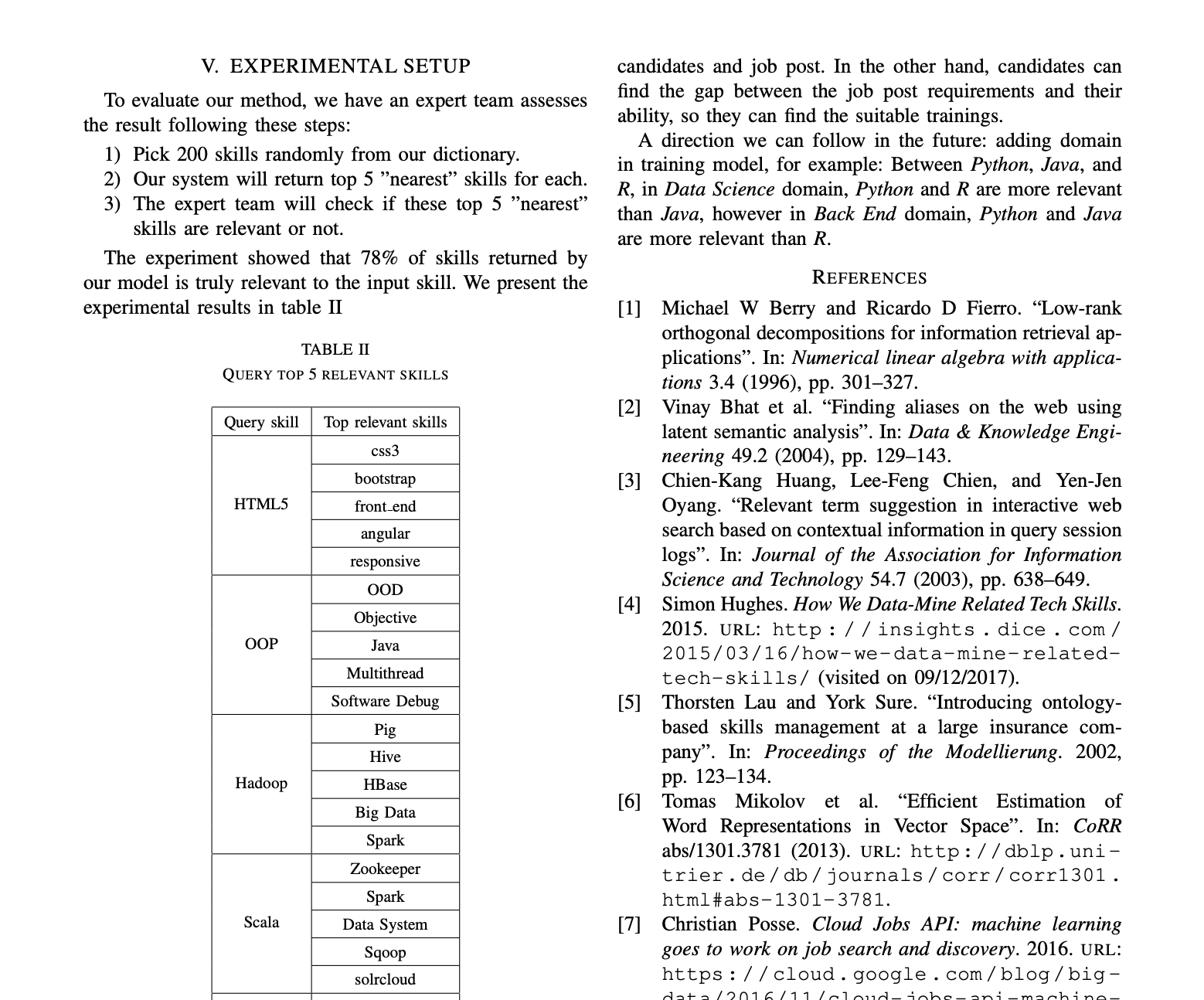

And now with ChatGPT

Hmm ...

What do you think?